Healthcare data breaches are the most expensive of any industry: IBM's 2023 Cost of a Data Breach Report put the healthcare average at $10.93 million per incident, more than double the $4.45 million cross-industry average. While other industries scramble to protect customer credit cards, healthcare developers are protecting something irreplaceable: medical histories, psychiatric records, and genomic data that can't be changed like a password. The stakes are different here. One misconfigured database, one unencrypted backup, one developer who copied production data to test locally – any of these can trigger investigations, lawsuits, and penalties that kill entire companies.

But HIPAA compliance in 2025 isn't what it was even five years ago. The entire industry has shifted. Cloud-native architectures mean PHI flows through systems in ways HIPAA's original authors never imagined. AI models train on patient data, telehealth platforms stream sensitive conversations, wearables constantly upload health metrics – each innovation creates new compliance challenges that outdated playbooks cannot handle. This guide cuts through the legal jargon to deliver what developers actually need: a practical roadmap for building HIPAA-compliant healthcare software from the ground up. We'll cover everything from pre-development risk assessments to post-deployment monitoring, common pitfalls that tank compliance audits, and how emerging technologies like AI and cloud services fit into the compliance framework.

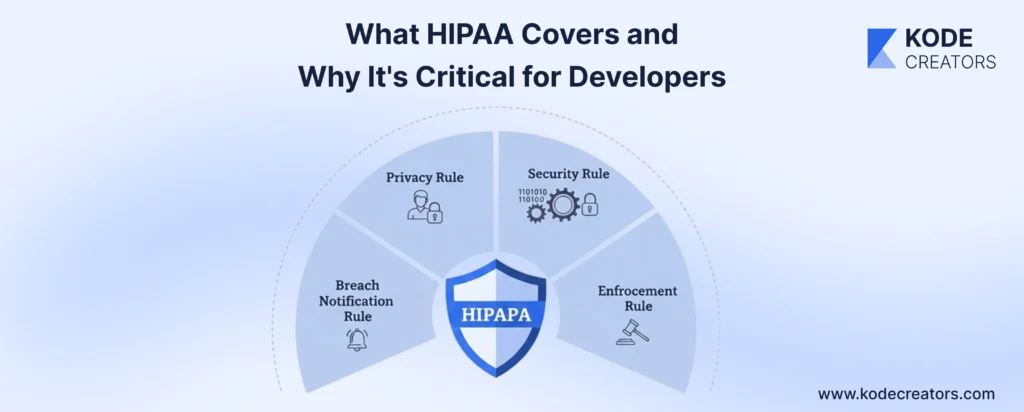

What HIPAA Covers and Why It's Critical for Developers

HIPAA breaks down into four rules that directly impact your code: Privacy (who can access PHI and how), Security (technical safeguards for electronic PHI), Breach Notification (what happens when things go wrong), and Enforcement (the penalties that keep CTOs awake at night). For developers, the Security Rule is where the rubber meets the road – it calls for encryption, access controls, audit logs, and integrity controls that translate directly into architectural decisions and code implementations.

Here's who's on the hook: covered entities (hospitals, clinics, health plans), business associates (anyone handling PHI on their behalf), and increasingly, healthcare software vendors. If your app touches patient data – even indirectly through APIs – you're likely a business associate. That smart scheduling app syncing with practice management systems? Business associate. The AI model analyzing anonymized patient outcomes? Still might qualify if re-identification is possible. Even a simple patient portal storing appointment histories needs full compliance.

PHI isn't just names and Social Security numbers – it's any health information that could identify someone. Your app's seemingly innocent features are PHI goldmines: appointment scheduling reveals when someone seeks treatment, prescription refill reminders expose medications, and EHR API calls pull entire medical histories. That fitness tracker integration? PHI. Chat messages between patients and providers? PHI. Even server logs showing user IDs accessing certain features can constitute PHI if they reveal health-related behavior patterns. Every feature you build either creates, transmits, stores, or processes PHI, and HIPAA governs every single interaction.

2025 Context: Emerging Tech and Updated Compliance Pressures

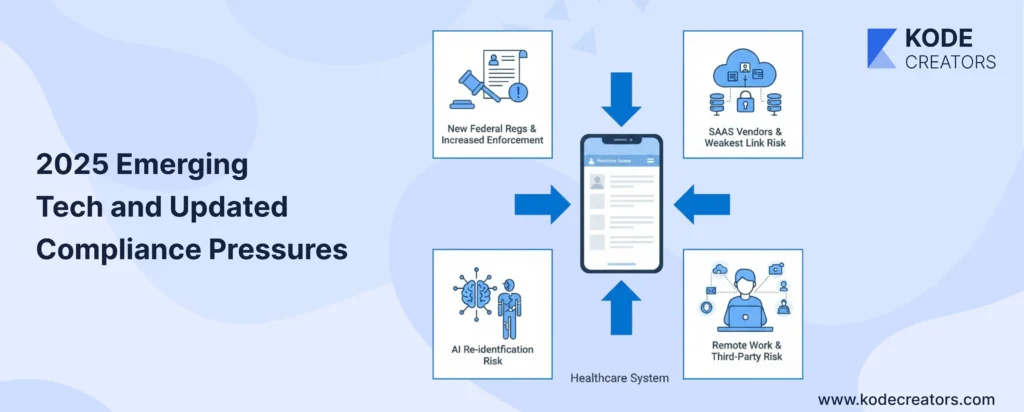

The healthcare tech stack of 2025 looks nothing like what HIPAA originally addressed. AI-powered diagnostic tools are processing millions of patient images, training on PHI, and making predictions that directly influence treatment. The compliance challenge? These models don't just store data – they learn from it, potentially encoding PHI into model weights that persist even after the original data is deleted. One healthcare startup discovered its "anonymized" AI model could reconstruct patient identities with 87% accuracy. That's a HIPAA nightmare nobody saw coming.

Cloud-native and SaaS healthcare tools have exploded, with 83% of healthcare organizations now running critical workloads in the cloud. But here's the catch: your HIPAA compliance is only as strong as your weakest vendor. That convenient authentication service, that slick analytics platform, that serverless function handling image uploads – each needs BAAs, security assessments, and continuous monitoring. FHIR APIs promised interoperability but created new attack surfaces. Every integration point is a potential breach point, and the new CMS interoperability rules mean you can't just lock down data anymore – you must share it securely.

Remote work permanently changed the game. PHI now flows through home networks, personal devices, and countless collaboration tools never designed for healthcare. Third-party risk has multiplied – the average healthcare app integrates with 23 external services. Meanwhile, enforcement is tightening dramatically. HHS announced 45% more investigations in 2024, state attorneys general are pursuing parallel actions, and the new federal cybersecurity requirements mandate specific technical controls previously left to interpretation. California's healthcare privacy law adds another layer, with stricter requirements than HIPAA and a private right of action. The message is clear: compliance standards are rising faster than most organizations can adapt.

Core HIPAA Compliance Checklist for Healthcare Software Development

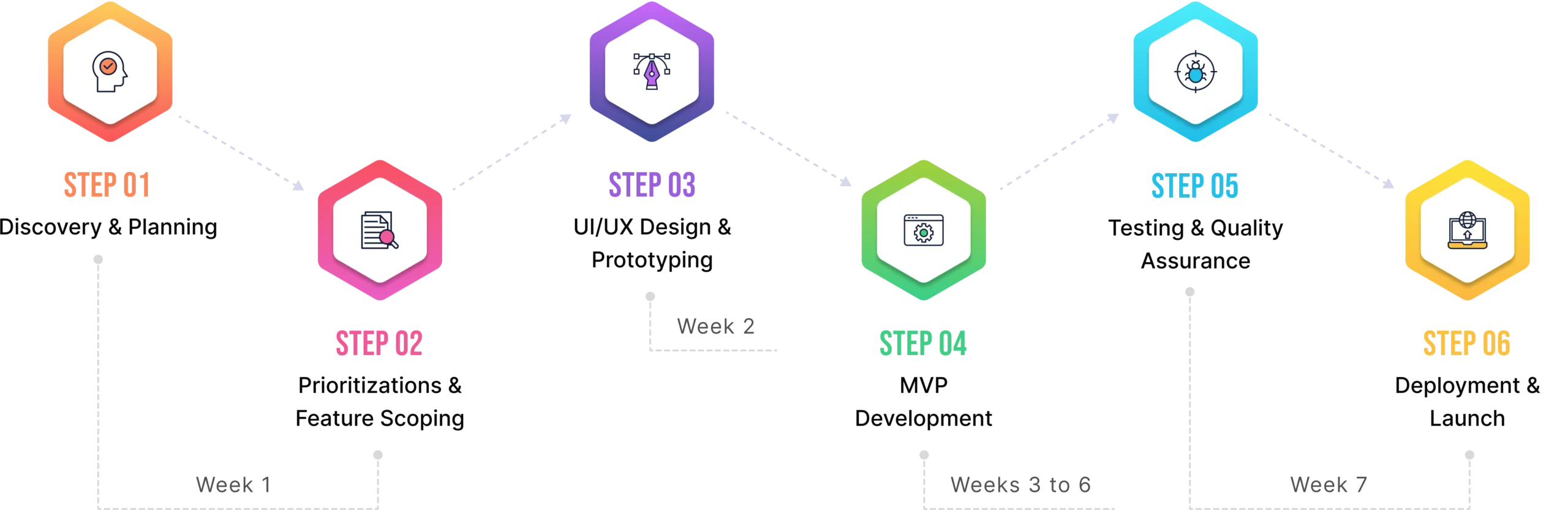

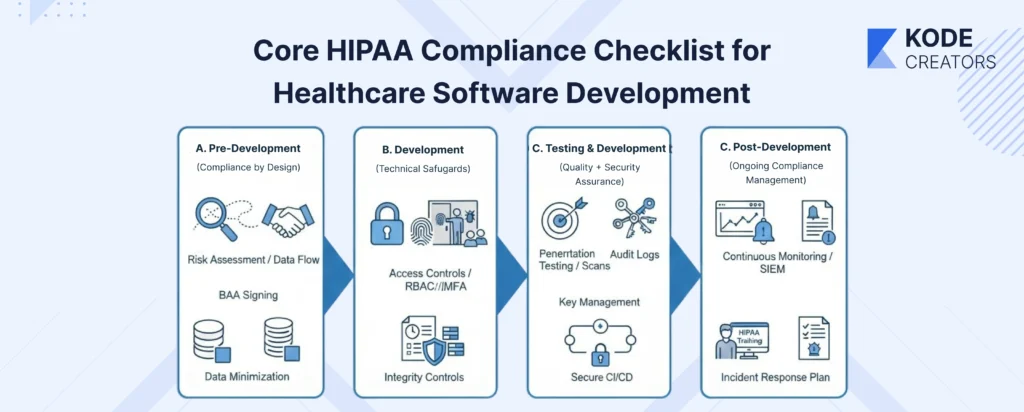

A. Pre-Development: Compliance by Design

Start with a HIPAA risk assessment before writing a single line of code. Map every place PHI will live, move, or be processed. That user profile? Contains name, DOB, and conditions. The appointment system? Reveals treatment patterns. Even your analytics dashboard could expose PHI through aggregation patterns. Document these touchpoints obsessively – auditors love documentation more than clean code.

Data flow diagrams aren't optional academic exercises – they're your compliance blueprint. Trace PHI from entry point to deletion: user inputs diagnosis → stored in PostgreSQL → synced to cloud backup → accessed via API → displayed in dashboard → archived after 7 years. Each arrow represents a compliance requirement. Missing one arrow during planning means scrambling during audits.
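One way to keep the diagram honest is to mirror it in a small, machine-checkable inventory. The sketch below is illustrative only: the `Hop` fields, the system names in `FLOW`, and the three checks are assumptions made for this example, not a complete set of HIPAA controls.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    """One arrow in the PHI data flow diagram (all fields are illustrative)."""
    source: str
    target: str
    encrypted_in_transit: bool
    target_encrypted_at_rest: bool
    third_party: bool = False
    baa_signed: bool = False


def find_gaps(flow: list[Hop]) -> list[str]:
    """Return a human-readable finding for every hop missing a safeguard."""
    findings = []
    for hop in flow:
        label = f"{hop.source} -> {hop.target}"
        if not hop.encrypted_in_transit:
            findings.append(f"{label}: no encryption in transit")
        if not hop.target_encrypted_at_rest:
            findings.append(f"{label}: target not encrypted at rest")
        if hop.third_party and not hop.baa_signed:
            findings.append(f"{label}: third party without a signed BAA")
    return findings


# Hypothetical flow loosely based on the example path above.
FLOW = [
    Hop("intake form", "PostgreSQL", True, True),
    Hop("PostgreSQL", "cloud backup", True, False, third_party=True, baa_signed=True),
    Hop("PostgreSQL", "API", True, True),
    Hop("API", "dashboard", False, True),
]

if __name__ == "__main__":
    for finding in find_gaps(FLOW):
        print(finding)
```

Running a check like this in CI means a new arrow cannot be added to the inventory without someone answering the encryption and BAA questions for it.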

BAAs need signing before any integration work begins. That payment processor, cloud provider, email service, SMS gateway – if they might touch PHI, they need a BAA. Don't wait until deployment to discover that your cloud provider's BAA covers only a specific list of eligible services, or that your perfect authentication provider won't sign one. Early BAA negotiations save architectural redesigns later.

Data minimization isn't just smart – it's required. Every additional PHI field multiplies the compliance burden. Do you really need the full SSN, or will the last four digits work? Must you store the complete medical history, or just relevant conditions? Each data point you don't collect is one you can't breach.
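Minimization is easiest to enforce at the point of ingestion. The following is a minimal sketch, assuming a hypothetical record schema: the field names in `ALLOWED_FIELDS` and the `ssn_last4` output key are invented for illustration.

```python
# Hypothetical allowlist; anything not named here is dropped at ingestion.
ALLOWED_FIELDS = {"patient_id", "relevant_conditions", "ssn"}


def minimize(record: dict) -> dict:
    """Keep only allowlisted fields and reduce the SSN to its last four digits."""
    kept = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    if "ssn" in kept:
        digits = "".join(ch for ch in str(kept.pop("ssn")) if ch.isdigit())
        kept["ssn_last4"] = digits[-4:]
    return kept


if __name__ == "__main__":
    raw = {
        "patient_id": "p-001",
        "ssn": "123-45-6789",
        "full_history": ["..."],
        "relevant_conditions": ["asthma"],
    }
    print(minimize(raw))
```

An allowlist is used rather than a blocklist so that a newly added upstream field is dropped by default instead of stored by accident.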

B. Development: Technical Safeguards to Implement

Encryption isn't negotiable. TLS 1.3 for transit (TLS 1.2 with strong cipher suites is still accepted under NIST guidance, but TLS 1.0 and 1.1 are deprecated and should be disabled). AES-256 for data at rest, including backups, logs, and temporary files. That dev who disabled SSL for "easier debugging"? They just created a million-dollar liability. Database field-level encryption for sensitive fields adds another layer – when (not if) someone accesses your database directly, PHI remains protected.
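For the in-transit half, a protocol floor can be set in code rather than left to defaults. This is a small sketch using Python's standard `ssl` module; the function name `make_client_context` is made up for this example, and encryption at rest is out of scope here.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Client-side TLS context that refuses anything older than TLS 1.3."""
    # create_default_context() keeps certificate and hostname verification on.
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


if __name__ == "__main__":
    ctx = make_client_context()
    print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)
```

The resulting context can be passed wherever the standard library accepts one, for example `urllib.request.urlopen(url, context=ctx)`. The "easier debugging" shortcut usually means turning off `check_hostname` or `verify_mode`, so asserting both in a test is a cheap guard.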

Access controls require both RBAC and MFA, no exceptions. Doctors see patient records, billing sees claims, and developers see nothing in production. MFA isn't just for production – development and staging environments with PHI copies need it too. Session management gets tricky: token-based authentication with appropriate timeouts (15 minutes of inactivity for high-privilege users), secure token storage, and proper revocation mechanisms.
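The rules in this paragraph compose into a single deny-by-default check. The sketch below assumes a hypothetical role map and permission names; a real system would load policy from configuration and verify MFA through its identity provider.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical role-to-permission map for illustration only.
ROLE_PERMISSIONS = {
    "doctor": {"read_patient_record"},
    "billing": {"read_claims"},
    "developer": set(),  # no production PHI access
}

IDLE_TIMEOUT = timedelta(minutes=15)


def is_allowed(role: str, permission: str, mfa_verified: bool,
               last_activity: datetime, now: datetime) -> bool:
    """Deny unless MFA passed, the session is fresh, and the role grants the permission."""
    if not mfa_verified:
        return False
    if now - last_activity > IDLE_TIMEOUT:
        return False
    return permission in ROLE_PERMISSIONS.get(role, set())


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    print(is_allowed("doctor", "read_patient_record", True, now, now))
    print(is_allowed("developer", "read_patient_record", True, now, now))
```

Unknown roles fall through to an empty permission set, so a typo in a role name fails closed rather than open.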

Audit logs must capture who did what, when, and to which PHI. Not just "user logged in" but "Dr. Smith accessed Patient #12345's psychiatric records at 3:47 PM from IP 192.168.1.1." These logs themselves become PHI and need encryption. Anomaly detection flags suspicious patterns – why did that inactive account suddenly download 10,000 records?
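A structured record makes the "who, what, when, which PHI" requirement concrete and gives anomaly detection something to query. The following sketch is an assumption-laden illustration: the field names, the JSON-lines format, and the simple distinct-patient threshold are choices made for this example, and it deliberately omits the encryption step.

```python
import json
from collections import Counter
from datetime import datetime, timezone


def audit_event(actor: str, action: str, patient_id: str, source_ip: str,
                when: datetime) -> str:
    """Serialize one access event as a JSON line (encrypt before storing)."""
    return json.dumps({
        "ts": when.isoformat(),
        "actor": actor,
        "action": action,
        "patient_id": patient_id,
        "source_ip": source_ip,
    }, sort_keys=True)


def bulk_access_actors(events: list[str], threshold: int) -> set[str]:
    """Flag actors who touched more than `threshold` distinct patients."""
    seen = set()
    for line in events:
        event = json.loads(line)
        seen.add((event["actor"], event["patient_id"]))
    counts = Counter(actor for actor, _ in seen)
    return {actor for actor, n in counts.items() if n > threshold}


if __name__ == "__main__":
    t = datetime(2025, 1, 1, 15, 47, tzinfo=timezone.utc)
    print(audit_event("dr_smith", "read_psych_record", "12345", "192.168.1.1", t))
```

A fixed threshold is the crudest possible detector; it is shown only to make the idea tangible, and a production system would compare against each account's own baseline.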

Integrity controls ensure data isn't tampered with. Implement checksums for file transfers, digital signatures for critical transactions, and version control for all PHI modifications. API security isn't just authentication – it's rate limiting, input validation, and encrypted payloads. FHIR and HL7 integrations need special attention; they're standardized but not automatically secure.
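For the checksum piece, a keyed hash detects tampering that a plain checksum would miss, because an attacker who alters the payload cannot recompute the tag without the key. This is a minimal sketch with Python's standard `hmac` module; the function names are invented here, and the key is assumed to come from a key management service rather than source code.

```python
import hashlib
import hmac


def sign(payload: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the payload."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(payload: bytes, key: bytes, tag: str) -> bool:
    """Check the tag in constant time to avoid timing side channels."""
    return hmac.compare_digest(sign(payload, key), tag)


if __name__ == "__main__":
    demo_key = b"demo-key-for-illustration-only"
    tag = sign(b"lab-result bytes", demo_key)
    print(verify(b"lab-result bytes", demo_key, tag))
    print(verify(b"lab-result bytes, edited", demo_key, tag))
```

The sender transmits the tag alongside the file, and the receiver rejects anything for which `verify` returns false.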

C. Testing & Deployment: Quality + Security Assurance

Penetration testing by healthcare-specialized firms catches what automated scans miss. They understand healthcare workflows and attack vectors specific to medical systems. Vulnerability scans should run continuously, not just before launch. Threat modeling examines each feature: "If an attacker compromised this component, what PHI could they access?"

Key management separates compliant systems from breach headlines. Encryption keys in environment variables? Wrong. Hardcoded in source? Career-ending. Use proper key management services, rotate keys regularly, and maintain separate keys for different environments. Your CI/CD pipeline needs HIPAA awareness – no copying production data to test, no PHI in build logs, no unencrypted artifacts.
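A pipeline can at least catch the most obvious case, a key pasted into source. The sketch below is a deliberately naive heuristic, not a substitute for a dedicated secret scanner: the regular expression and the function name are assumptions for illustration, and it will produce both false positives and false negatives.

```python
import re

# Heuristic only: a name containing key/secret/password/token that is
# assigned a quoted literal of eight or more characters.
HARDCODED_SECRET = re.compile(
    r"""\b\w*(key|secret|password|token)\w*\s*[:=]\s*["'][^"']{8,}["']""",
    re.IGNORECASE,
)


def find_hardcoded_secrets(source: str) -> list[int]:
    """Return 1-based line numbers that look like hardcoded credentials."""
    return [
        number
        for number, line in enumerate(source.splitlines(), start=1)
        if HARDCODED_SECRET.search(line)
    ]


if __name__ == "__main__":
    sample = 'ENCRYPTION_KEY = "0123456789abcdef"\ntimeout = 15\n'
    print(find_hardcoded_secrets(sample))
```

Failing the build on any hit forces the conversation about where the key should live before the commit reaches a shared branch.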

D. Post-Deployment: Ongoing Compliance Management

Annual risk assessments aren't enough anymore – healthcare threats evolve monthly. Continuous monitoring through SIEM tools catches breaches while they're still containable. Set up alerts for unusual access patterns, failed authentication spikes, and large data exports.
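Most SIEM tools express the alerts above as rules, but the underlying logic is simple enough to sketch. The class below shows one possible sliding-window detector for failed-authentication spikes; the class name, the window, and the threshold are illustrative assumptions rather than recommended values.

```python
from collections import deque
from datetime import datetime, timedelta


class FailedLoginMonitor:
    """Sliding-window counter that signals when failures exceed a threshold."""

    def __init__(self, window: timedelta, threshold: int) -> None:
        self.window = window
        self.threshold = threshold
        self._failures = deque()

    def record_failure(self, when: datetime) -> bool:
        """Record one failure; return True if the window now exceeds the threshold."""
        self._failures.append(when)
        cutoff = when - self.window
        # Drop failures that have aged out of the window.
        while self._failures and self._failures[0] < cutoff:
            self._failures.popleft()
        return len(self._failures) > self.threshold


if __name__ == "__main__":
    monitor = FailedLoginMonitor(window=timedelta(minutes=5), threshold=3)
    start = datetime(2025, 1, 1, 2, 0)
    for i in range(4):
        print(monitor.record_failure(start + timedelta(seconds=10 * i)))
```

The same pattern applies to large exports: count records returned per account per window instead of failed logins.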

Training isn't just for clinical staff. That support engineer with production access? They need to understand PHI handling. The contractor fixing bugs? HIPAA training before repository access. Document everything: training attendance, incident responses, and configuration changes. When auditors arrive (not if), comprehensive documentation demonstrates compliance culture, not just technical controls.

Incident response plans must be specific and practiced. Who gets called at 2 AM when suspicious activity is detected? How quickly can you identify affected patients for breach notification? Regular drills expose gaps before real incidents do.
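The "how quickly can you identify affected patients" question is largely a query over audit data. The sketch below assumes audit events are available as dictionaries with hypothetical `actor`, `patient_id`, and `ts` keys. The 60-day figure reflects the outer limit in the HIPAA Breach Notification Rule for notifying individuals, which also requires notice without unreasonable delay; state laws may set shorter deadlines.

```python
from datetime import date, datetime, timedelta

# Outer limit for individual notice after discovery; not a target.
NOTIFICATION_WINDOW = timedelta(days=60)


def affected_patients(events: list[dict], actor: str,
                      start: datetime, end: datetime) -> set[str]:
    """Patients whose records the compromised actor touched in the window."""
    return {
        event["patient_id"]
        for event in events
        if event["actor"] == actor and start <= event["ts"] <= end
    }


def notification_deadline(discovered: date) -> date:
    """Latest permissible notification date under the 60-day limit."""
    return discovered + NOTIFICATION_WINDOW


if __name__ == "__main__":
    print(notification_deadline(date(2025, 1, 10)))
```

Running this query during a drill is a quick way to learn whether the audit log actually records enough detail to answer it.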

Common Mistakes Developers Make (and How to Avoid Them)

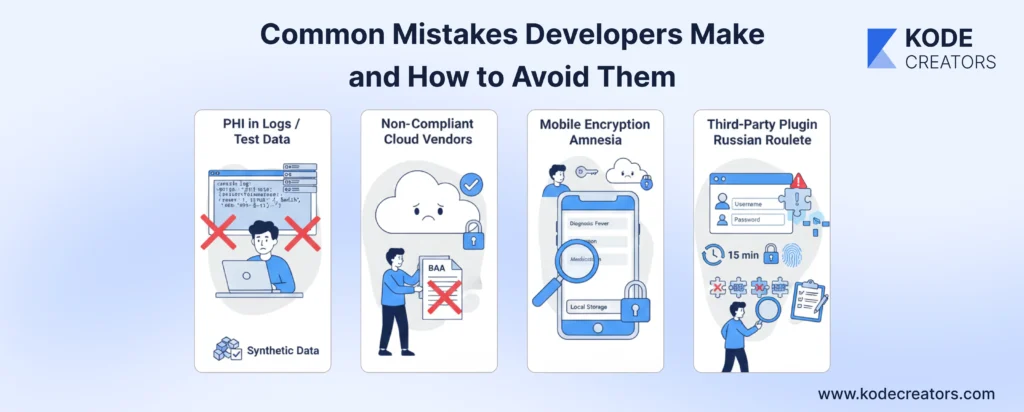

PHI in logs and test data – The classic killer. Developer adds helpful debugging: console.log('Processing patient:', patientData). Six months later, those CloudWatch logs, now containing thousands of patient records, are breached. Similarly, copying production data for testing seems efficient until auditors find unencrypted PHI on developer laptops. Solution: sanitize logs religiously, use synthetic test data, and if you must use real data, de-identify it properly (not just changing names to "Test Patient").
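Log sanitization works best as a safety net in the logging layer, so that one careless debug statement does not leak a full record. Here is a rough Python sketch of that idea using a `logging.Filter`; the `SENSITIVE_KEYS` names and the SSN pattern are assumptions for illustration and would miss many real-world PHI formats.

```python
import logging
import re

SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
SENSITIVE_KEYS = {"name", "dob", "ssn", "diagnosis"}  # hypothetical field names


def scrub(value):
    """Redact sensitive dict keys and SSN-shaped substrings."""
    if isinstance(value, dict):
        return {
            key: "[REDACTED]" if key in SENSITIVE_KEYS else scrub(item)
            for key, item in value.items()
        }
    if isinstance(value, str):
        return SSN_PATTERN.sub("[REDACTED]", value)
    return value


class PHIRedactingFilter(logging.Filter):
    """Scrub the message and its arguments before any handler formats them."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = scrub(record.msg)
        if isinstance(record.args, tuple):
            record.args = tuple(scrub(arg) for arg in record.args)
        elif isinstance(record.args, dict):
            record.args = scrub(record.args)
        return True  # never drop the record, only rewrite it


if __name__ == "__main__":
    handler = logging.StreamHandler()
    handler.addFilter(PHIRedactingFilter())
    log = logging.getLogger("intake")
    log.addHandler(handler)
    log.setLevel(logging.INFO)
    log.info("Processing patient: %s", {"name": "Jane Doe", "visit_id": 7})
```

The filter is attached to the handler rather than the logger so that records propagated from child loggers pass through it as well. Pattern-based redaction is a backstop; the primary control is still not logging patient objects in the first place.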

Non-compliant cloud vendors – That blazing-fast CDN or convenient logging service might be perfect technically but deadly legally. Many vendors won't sign BAAs or lack healthcare-specific security controls. Azure offers a BAA and HIPAA-eligible services, though configuring them securely is still your job; that discount hosting provider offers neither. Always verify BAA availability before architectural decisions, not after building everything.

Mobile encryption amnesia – Mobile apps cache everything for performance. Patient data sitting in local storage, images in temp directories, API responses in memory – all unencrypted by default. iOS and Android offer encryption APIs, but they're not automatic. Implement encryption for all local storage, clear sensitive data on logout, and disable screenshots in sensitive screens.

Authentication afterthoughts – "We'll add proper auth later" becomes production reality. Missing session timeouts mean PHI stays accessible on unattended computers. Single-factor authentication invites breaches. Implement comprehensive authentication from day one: appropriate timeouts (15 minutes for high-privilege), MFA everywhere, and secure session management.

Third-party plugin Russian roulette – That handy jQuery plugin for appointment scheduling? It might be phoning home with patient data. WordPress plugins are notorious for vulnerabilities. Audit every third-party component, maintain a software bill of materials, and regularly update dependencies. One vulnerable plugin can compromise everything.
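A software bill of materials starts with simply knowing what is installed. The example in the text concerns browser and WordPress plugins; the sketch below applies the same idea to a Python backend using the standard `importlib.metadata` module. The `unapproved` helper and its allowlist are hypothetical, and a real SBOM would use a standard format and include transitive dependencies from every ecosystem in the stack.

```python
from importlib import metadata


def installed_components() -> list[tuple[str, str]]:
    """List (name, version) for every installed distribution, sorted by name."""
    components = {
        (dist.metadata["Name"], dist.version)
        for dist in metadata.distributions()
        if dist.metadata["Name"]  # skip entries with broken metadata
    }
    return sorted(components, key=lambda item: item[0].lower())


def unapproved(components: list[tuple[str, str]], approved: set[str]) -> list[str]:
    """Names of components that are not on the reviewed allowlist."""
    return [name for name, _ in components if name.lower() not in approved]


if __name__ == "__main__":
    for name, version in installed_components():
        print(f"{name}=={version}")
```

Diffing this list between releases makes newly introduced dependencies visible at review time instead of at audit time.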

Future Outlook: Preparing for HIPAA Compliance Beyond 2025

AI governance meets HIPAA – The collision is already happening. New frameworks are emerging that treat AI models as entities that can "store" PHI within their parameters. By 2027, expect requirements for model auditing, explainability in healthcare decisions, and "PHI deletion" from trained models. Organizations building AI-powered diagnostics need to start documenting training data lineage now. The EU's AI Act already classifies healthcare AI as high-risk; similar U.S. regulations are coming.

Zero-trust becomes mandatory – "Trust but verify" is dead in healthcare. Zero-trust architecture – where every request is authenticated, authorized, and encrypted regardless of source – is becoming the standard. Microsoft's healthcare customers already report 50% fewer breaches after zero-trust implementation. Future HIPAA guidance will likely mandate zero-trust principles: micro-segmentation, continuous verification, least-privilege access, and assumption of breach.

Vendor risk management gets teeth – Third-party breaches caused 60% of healthcare incidents last year. Expect requirements for continuous vendor monitoring, not just annual assessments. Real-time API security scoring, automated BAA enforcement, and mandatory breach notification within hours, not days. Smart organizations are already building vendor risk scorecards and automated cutoffs for non-compliant services.

Automated compliance monitoring – ML models are learning to spot compliance violations better than humans. They detect unusual access patterns, flag potential PHI in unexpected places, and predict breach risks before they materialize. By 2026, automated compliance monitoring won't be optional – it'll be evidence of reasonable security measures. Organizations training these models now on their audit logs and incident data will have massive advantages when enforcement expects predictive compliance, not just reactive responses.